|

Size: 8737

Comment:

|

Size: 20983

Comment:

|

| Deletions are marked like this. | Additions are marked like this. |

| Line 1: | Line 1: |

| = Situations with more than two variables of interest = When considering the relationship among three or more variables, an '''interaction''' may arise. Interactions describe a situation in which the simultaneous influence of two variables on a third is not additive. Most commonly, interactions are considered in the context of '''Multiple Regression''' analyses, but they may also be evaluated using '''Two-Way ANOVA'''. * Example Problem * Two-Way ANOVA * Multiple Regression |

= Situations with Two or More Variables of Interest = When considering the relationship among three or more variables, an '''interaction''' may arise. Interactions describe a situation in which the simultaneous influence of two variables on a third is not additive. Most commonly, interactions are considered in the context of '''multiple regression''' analyses, but they may also be evaluated using '''two-way ANOVA'''. * [[MoreThanTwoVariables#An Example Problem|Example Problem]] * [[MoreThanTwoVariables#Two-Way ANOVA|Two-Way ANOVA]] * [[MoreThanTwoVariables#Tukey's HSD (Honestly Significant Difference) Test|Tukey's HSD Test]] * [[MoreThanTwoVariables#Multiple Regression|Multiple Regression]] |

| Line 10: | Line 11: |

| Suppose we are interested in studying the effects of cocaine on sleep. We might design an experiment to simultaneously test whether both the use of cocaine and the duration of usage affect the number of hours a squirrel will sleep in a night. We might give half of the squirrels we test cocaine, and the other half a placebo substance (the ''substance'' variable). And we might vary the duration of usage by administering cocaine or placebo for one of two possible durations before test, 4 weeks or 12 weeks (the ''duration'' variable). We can then consider the average treatment response (e.g. number of hours slept) for each squirrel, as a function of the treatment combination that was administered (e.g. substance and duration). The following table shows one possible situation: ||||||||HOURS SLEPT IN SINGLE NIGHT|| || '''4-Week Placebo (Control)''' || '''4-Week Cocaine''' || '''12-Week Placebo (Control)''' || '''12-Week Cocaine''' || |

To determine if prenatal exposure to cocaine alters dendritic spine density within prefrontal cortex, 20 rats were equally divided between treatment groups that were prenatally exposed to either cocaine or placebo. Further, because any effect of prenatal drug exposure might be evident at one age but not another, animals within each treatment group were further divided into two groups. One sub-group was studied at 4 weeks of age and the other was studied at 12 weeks of age. Thus, our independent variables are treatment (prenatal drug/placebo exposure) and age (lets say 4 and 12 weeks of age), and our dependent variable is spine density. The following table shows one possible outcome of such a study: ||||||||DENDRITIC SPINE DENSITY|| || '''4-Week Control''' || '''4-Week Cocaine''' || '''12-Week Control''' || '''12-Week Cocaine''' || |

| Line 20: | Line 22: |

| There are '''three null hypotheses''' we may want to test. The first two test the effects of each factor under investigation: * H,,01,,: Both substance groups sleep for the same number of hours on average. * H,,02,,: Both treatment duration groups sleep for the same number of hours on average. And the third tests for an interaction between these two factors: * H,,03,,: The two factors are independent or there is no interaction effect. |

There are '''three null hypotheses''' we may want to test. The first two test the effects of each independent variable (or factor) under investigation, and the third tests for an interaction between these two factors: * ''H,,01,,'': Prenatal treatment substance (control or cocaine) has no effect on dendritic spine density in rats. We can state this more formally as {{attachment:mu.gif|mu}}'',,Control,,'' = {{attachment:mu.gif|mu}}'',,Cocaine,,'', where {{attachment:mu.gif|mu}}'',,Control,,'' and {{attachment:mu.gif|mu}}'',,Cocaine,,'' are the population mean spine densities of rats who ''were not'' and ''were'' prenatally exposed to cocaine, respectively. * ''H,,02,,'': Age has no effect on dendritic spine density. Or, more formally, {{attachment:mu.gif|mu}}'',,4-Week,,'' = {{attachment:mu.gif|mu}}'',,12-Week,,'', where {{attachment:mu.gif|mu}}'',,4-Week,,'' and {{attachment:mu.gif|mu}}'',,12-Week,,'' are the population mean spine densities of rats at the ages of 4- and 12-weeks, respectively. * ''H,,03,,'': The two factors (treatment and age) are independent; i.e., there is no interaction effect. Or, {{attachment:mu.gif|mu}}'',,4-Week, Control,,'' = {{attachment:mu.gif|mu}}'',,4-Week, Cocaine,,'' = {{attachment:mu.gif|mu}}'',,12-Week, Control,,'' = {{attachment:mu.gif|mu}}'',,12-Week, Cocaine,,'', where each {{attachment:mu.gif|mu}} represents the population mean spine density of rats at the labeled age and prenatal treatment condition. |

| Line 30: | Line 38: |

| A two-way ANOVA is an analysis technique that quantifies how much of the variance in a sample can be accounted for by each of two categorical variables and their interactions. '''Step 1''' is to compute the '''group means''' (for each cell, row, and column): |

A two-way ANOVA is an analysis technique that quantifies how much of the variance in a sample can be accounted for by each of two categorical variables and their interactions. Note that different rats are used in each of the four experimental groups, so a standard two-way ANOVA should be used. If the same rats were used in each experimental condition, one would want to use a two-way repeated-measures ANOVA. '''Step 1''' is to compute '''group means''' (for each cell group), '''row and column means''', and the '''grand mean''' (for all observations in the whole experiment): |

| Line 36: | Line 43: |

| || || '''4-Week''' || '''12-Week''' || '''''All Durations''''' || || '''Placebo''' || 7 || 9.7|| ''8.35'' || |

|| || '''4-Week''' || '''12-Week''' || '''''All Ages''''' || || '''Control''' || 7 || 9.7|| ''8.35'' || |

| Line 39: | Line 46: |

| || '''''All Substances''''' || ''5.95'' || ''7.2'' || ''6.575'' || '''Step 2''' is to calculate the sum of squares (SS) for each group (cell) using the following formula: {{{#!latex \[ \sum_{i=1} (x_{i,g} - \overline{X}_g)^2 \] }}} where <<latex($x_{i,g}$)>> is the i'th measurement for group g, and <<latex($\overline{X}_g$)>> is the overall group mean for group g. |

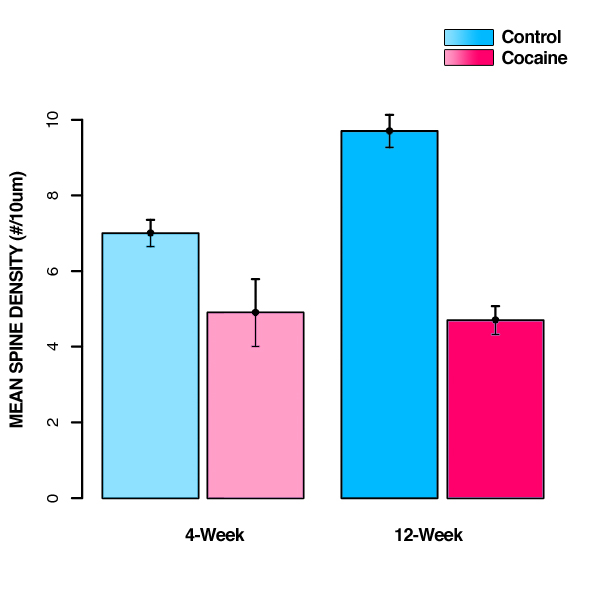

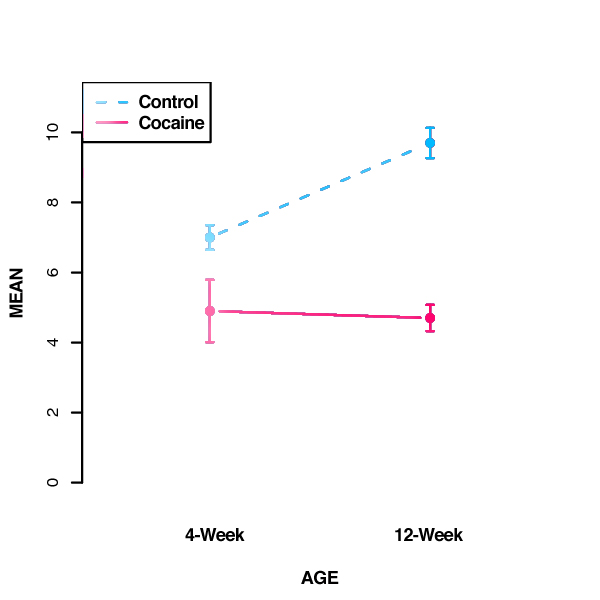

|| '''''All Prenatal Exposures''''' || ''5.95'' || ''7.2'' || ''6.575'' || '''''Take a moment to define your variables'''''<<BR>> Before we go any further, we should define some variables that we'll use to complete the computations needed for the rest of the analysis: ''n'' = number of observations in each group (here, it's 5 since there are 5 subjects per group) <<BR>> ''N'' = total number of observations in the whole experiment (here, 20) <<BR>> ''r'' = number of rows (here 2, one for each age group) <<BR>> ''c'' = number of columns (here 2, one for each treatment substance condition <<BR>> {{attachment:xbar.gif|X-bar}}'',,g,,'' = mean of a particular cell group ''g'' (for example, {{attachment:xbar.gif|X-bar}}'',,12-Week, Control,,'' = ''9.7''). <<BR>> {{attachment:xbar.gif|X-bar}}'',,R,,'' = mean of a particular row ''R'' (for example, {{attachment:xbar.gif|X-bar}}'',,4-Week,,'' = ''5.95'')<<BR>> {{attachment:xbar.gif|X-bar}}'',,C,,'' = mean of a particular column ''C'' (for example, {{attachment:xbar.gif|X-bar}}'',,Cocaine,,'' = ''4.8'')<<BR>> And finally, to refer to the '''''grand mean''''' (the overall mean of all observations in the experiment), we'll simply use the notation {{attachment:xbar.gif|X-bar}} (here, {{attachment:xbar.gif|X-bar}} = 6.575). Now that we've calculated all of our means, it would be helpful to also plot the mean data. (It's easier to understand it that way!) {{attachment:2-way_barplot.jpg|Means by group|width=450}} '''Step 2''' is to calculate the sum-of-squares for each individual group (''SS,,g,,'') using this: {{{#!latex \[ SS = \sum_{i=1} (x_{i,g} - \overline{X}_g)^2 \] }}} where ''x,,i,g,,'' is the ''i''th measurement for group ''g'', and {{attachment:xbar.gif|X-bar}}'',,g,,'' is the mean of group ''g''. Remember here that when we say "group", we are referring to individual cells (not rows or columns, which we'll deal with next): |

| Line 53: | Line 77: |

| 4-Week Placebo: <<BR>> {7, 8, 6, 7, 6.5}, <<latex($\overline{X}_{4-week, placebo}$)>> = 7<<BR>> <<latex($SS_{4-week, placebo}$)>> = (7-7)^2^ + (8-7)^2^ + (6-7)^2^ + (7-7)^2^ + (6.5-7)^2^ = '''2.25'''<<BR>><<BR>> |

4-Week Control: <<BR>> {7.5, 8, 6, 7, 6.5}, {{attachment:xbar.gif|X-bar}}'',,4-week, control,,'' = 7<<BR>> ''SS,,4-week, control,,'' = (7.5-7)^2^ + (8-7)^2^ + (6-7)^2^ + (7-7)^2^ + (6.5-7)^2^ = '''2.5'''<<BR>><<BR>> |

| Line 58: | Line 82: |

| {5.5, 3.5, 4.5, 6, 5}, <<latex($\overline{X}_{4-week, cocaine}$)>> = 4.9<<BR>> <<latex($SS_{4-week, cocaine}$)>> = (5.5-4.9)^2^ + (3.5-4.9)^2^ + (4.5-4.9)^2^ + (6-4.9)^2^ + (5-4.9)^2^ = '''3.7'''<<BR>><<BR>> 12-Week Placebo: <<BR>> {8, 10, 13, 9, 8.5}, <<latex($\overline{X}_{12-week, placebo}$)>> = 9.7<<BR>> <<latex($SS_{12-week, placebo}$)>> = (8-9.7)^2^ + (10-9.7)^2^ + (13-9.7)^2^ + (9-9.7)^2^ + (8.5-9.7)^2^ = '''15.8'''<<BR>><<BR>> |

{5.5, 3.5, 4.5, 6, 5}, {{attachment:xbar.gif|X-bar}}'',,4-week, cocaine,,'' = 4.9<<BR>> ''SS,,4-week, cocaine,,'' = (5.5-4.9)^2^ + (3.5-4.9)^2^ + (4.5-4.9)^2^ + (6-4.9)^2^ + (5-4.9)^2^ = '''3.7'''<<BR>><<BR>> 12-Week Control: <<BR>> {8, 10, 13, 9, 8.5}, {{attachment:xbar.gif|X-bar}}'',,12-week, control,,'' = 9.7<<BR>> ''SS,,12-week, control,,'' = (8-9.7)^2^ + (10-9.7)^2^ + (13-9.7)^2^ + (9-9.7)^2^ + (8.5-9.7)^2^ = '''15.8'''<<BR>><<BR>> |

| Line 66: | Line 90: |

| {5, 4.5, 4, 6, 4}, <<latex($\overline{X}_{12-week, cocaine}$)>> = 4.7<<BR>> <<latex($SS_{12-week, cocaine}$)>> = (5-4.7)^2^ + (4.5-4.7)^2^ + (4-4.7)^2^ + (6-4.7)^2^ + (4-4.7)^2^ = '''2.8'''<<BR>><<BR>> '''Step 3''' is to calculate the between-groups sum of squares(''SS,,B,,''):<<BR>> {{{#!latex \[ n \cdot \sum_{g} (\overline{X}_g - \overline{X})^2 \] }}} where n is the number of subjects in each group, <<latex($\overline{X}_g$)>> is the mean for group g, and <<latex($\overline{X}$)>> is the overall mean (across groups). <<latex($SS_{B}$)>> = <<latex($n$)>> [( <<latex($\overline{X}_{4-week, placebo}$)>> - <<latex($\overline{X}$)>> )^2^ + ( <<latex($\overline{X}_{4-week, cocaine}$)>> - <<latex($\overline{X}$)>>)^2^ + ( <<latex($\overline{X}_{12-week, placebo}$)>> - <<latex($\overline{X}$)>> )^2^ + ( <<latex($\overline{X}_{12-week, cocaine}$)>> - <<latex($\overline{X}$)>>)^2^]<<BR>> |

{5, 4.5, 4, 6, 4}, {{attachment:xbar.gif|X-bar}}'',,12-week, cocaine,,'' = 4.7<<BR>> ''SS,,12-week, cocaine,,'' = (5-4.7)^2^ + (4.5-4.7)^2^ + (4-4.7)^2^ + (6-4.7)^2^ + (4-4.7)^2^ = '''2.8'''<<BR>><<BR>> '''Step 3''' is to calculate the ''between-groups'' sum-of-squares (''SS,,B,,''):<<BR>> {{{#!latex \[ SS_B = n \cdot \sum_{g} (\overline{X}_{g} - \overline{X})^2 \] }}} where ''n'' is the number of observations per group, {{attachment:xbar.gif|X-bar}}'',,g,,'' is the mean for group ''g'', and {{attachment:xbar.gif|X-bar}} is the grand mean. So... '''''SS,,B,,''''' = ''n'' [( {{attachment:xbar.gif|X-bar}}'',,4-week, control,,'' - {{attachment:xbar.gif|X-bar}} )^2^ + ( {{attachment:xbar.gif|X-bar}}'',,4-week, cocaine,,'' - {{attachment:xbar.gif|X-bar}})^2^ + ( {{attachment:xbar.gif|X-bar}}'',,12-week, control,,'' - {{attachment:xbar.gif|X-bar}} )^2^ + ( {{attachment:xbar.gif|X-bar}}'',,12-week, cocaine,,'' - {{attachment:xbar.gif|X-bar}})^2^]<<BR>> |

| Line 87: | Line 109: |

| Now, '''Step 4''' , we'll calculate the sum-of-squares within groups (<<latex($SS_{W}$)>>):<<BR>> <<latex($SS_{W}$)>> = <<latex($SS_{4-week, placebo}$)>> + <<latex($SS_{4-week, cocaine}$)>> + <<latex($SS_{12-week, placebo}$)>> + <<latex($SS_{12-week, cocaine}$)>><<BR>> = 2.25 + 3.7 + 15.8 + 2.8<<BR>> = '''24.55'''<<BR>><<BR>> <<latex($df_{w}$)>> = <<latex($N - rc$)>><<BR>> |

'''Step 4''' pertains to ''within-group'' variance. Here, we'll calculate the sum-of-squares, degrees of freedom, and mean square error ''within groups''. First, compute the within-groups sum-of-squares (''SS,,W,,'') by summing the sum-of-squares you computed for each group in Step 1: {{{#!latex \[ SS_W = \sum_{g} SS_g \] }}} where ''SS,,g,,'' is the sum-of-squares for group ''g''. So... <<BR>> '''''SS,,W,,''''' = ''SS,,4-week, control,,'' + ''SS,,4-week, cocaine,,'' + ''SS,,12-week, control,,'' + ''SS,,12-week, cocaine,,''<<BR>> = 2.5 + 3.7 + 15.8 + 2.8<<BR>> = '''24.8'''<<BR>><<BR>> Next, calculate the within-groups ''degrees of freedom'' (''df,,W,,''), using this: ''df,,W,, = N - rc'' where ''N''is the total number of observations in the experiment, ''r'' is the number of rows, and ''c'' is the number of columns. So... <<BR>> '''''df,,W,,''' = N - rc'' <<BR>> |

| Line 96: | Line 133: |

| <<latex($s_{w}^2$)>> = ''SS,,W,,'' / ''df,,W,,''<<BR>> = 24.55 / 16<<BR>> = '''1.534375'''<<BR>><<BR>> For '''Step 5''', we'll calculate the ''SS,,R,,'':<<BR>> '''''SS,,R,,''''' = ''n'' [( ''M,,Placebo,,'' - ''M,,Group,,'' )^2^ + ( ''M,, Cocaine,,'' - ''M,,Group,,'')^2^]<<BR>> |

And finally, we compute the within-groups ''mean square error'' ('''''s,,W,,^2^''''') by dividing ''SS,,W,,''by ''df,,W,,'':<<BR>> '''''s,,W,,^2^''''' = ''SS,,W,,'' / ''df,,W,,''<<BR>> = 24.8 / 16<<BR>> = '''1.55'''<<BR>> Note that ''SS,,W,,'' is also known as the "residual" or "error" since it quantifies the amount of variability after the condition means are taken into account. The degrees of freedom here are ''N - rc'' because there are ''N'' data points, but the number of means fit is ''r*c'', giving a total of ''N - rc'' variables that are free to vary. <<BR>><<BR>> '''Step 5''' pertains to ''row'' variance. Here, we'll calculate all the same stuff we did in that last step (the sum-of-squares, degrees of freedom, and mean square error), but for the ''rows''. First, compute the row sum-of-squares (''SS,,R,,'') by summing the sum-of-squares you computed for each row in Step 1: {{{#!latex \[ SS_R = n_R \cdot \sum_{R} (\bar{X}_R - \bar{X})^2 \] }}} where ''n,,R,,'' is the number of observations per row, {{attachment:xbar.gif|X-bar}}'',,R,,'' is the mean of row ''R'', and {{attachment:xbar.gif|X-bar}} is the grand mean. '''''SS,,R,,''''' = ''n,,R,,'' [( {{attachment:xbar.gif|X-bar}}'',,control,,'' - {{attachment:xbar.gif|X-bar}} )^2^ + ( {{attachment:xbar.gif|X-bar}}'',,cocaine,,'' - {{attachment:xbar.gif|X-bar}})^2^]<<BR>> |

| Line 108: | Line 157: |

| '''''df,,R,,''''' = ''r'' - 1<<BR>> | '''''df,,R,,''''' = ''r'' - 1, where ''r'' is the number of rows.<<BR>> |

| Line 111: | Line 160: |

And, just as before, divide the sum-of-squares by the degrees of freedom to get the mean square error for the rows: |

|

| Line 116: | Line 167: |

| In '''Step 6''', we calculate the ''SS,,C,,'':<<BR>> '''''SS,,C,,''''' = ''n'' [( ''M,,4-Week,,'' - ''M,,Group,,'' )^2^ + ( ''M,, 12-Week,,'' - ''M,,Group,,'')^2^]<<BR>> |

In '''Step 6''', we calculate all of the variance metrics again for the ''columns'' (''SS,,C,,'', ''df,,C,,'', ''s,,C,,^2^''):<<BR>> {{{#!latex \[ SS_C = n_C \cdot \sum_{C} (\overline{X}_C - \overline{X})^2 \] }}} where ''n,,C,,'' is the number of observations per column, {{attachment:xbar.gif|X-bar}}'',,C,,'' is the mean of column ''C'', and {{attachment:xbar.gif|X-bar}} is the grand mean. So... '''''SS,,C,,''''' = ''n,,C,,'' ( {{attachment:xbar.gif|X-bar}}'',,4-week,,'' - {{attachment:xbar.gif|X-bar}} )^2^ + ( {{attachment:xbar.gif|X-bar}}'',,12-week,,'' - {{attachment:xbar.gif|X-bar}} )^2^]<<BR>> |

| Line 124: | Line 184: |

| '''''df,,C,,''''' = ''c'' - 1<<BR>> | '''''df,,C,,''''' = ''c'' - 1, where ''c'' is the number of columns.<<BR>> |

| Line 133: | Line 193: |

| In '''Step 7''', calculate the ''SS,,RC,,'':<<BR>> | '''Step 7''' is to calculate the same three variance metrics the ''row-column interaction'' (''SS,,RC,,'', ''df,,RC,,'', ''s,,RC,,^2^''). To calculate''SS,,RC,,'', simply sum the sum-of-squares you calculated for between-groups, rows, and columns (''SS,,B,,'', ''SS,,R,,'', and ''SS,,C,,'', respectively): |

| Line 139: | Line 199: |

| And, for ''df,,RC,,'' ... | |

| Line 143: | Line 204: |

| Finally, ''s,,RC,,^2^'': | |

| Line 147: | Line 209: |

| Now, in '''Step 8''', we'll calculate the ''SS,,T,,'':<<BR>> | '''Step 8''' is to calculate the ''total'' variance metrics for the experiment. As always, start by computing the sum-of-squares (''SS,,T,,''), which is done here by summing the between-groups, within-groups, row, column, and interaction sum-of-squares you computed in steps 3-7:<<BR>> |

| Line 150: | Line 212: |

| = 81.3375+ 24.55 + 63.0125 + 7.8125 + 10.5125<<BR>> = 187.225<<BR>><<BR>> '''''df,,T,,''''' = ''N'' - 1<<BR>> |

= 81.3375 + 24.8 + 63.0125 + 7.8125 + 10.5125<<BR>> = 187.475<<BR>><<BR>> '''''df,,T,,''''' = ''N'' - 1, where ''N'' is the total number of observations in the experiment.<<BR>> |

| Line 157: | Line 219: |

| At '''Step 9''', we calculate the ''F'' values: | '''Step 9''' is calculating the ''F''-values (''F,,obt,,'') required to determine significance. For two-way ANOVAs, you'll compute 3 ''F''-values: one for ''rows'', one for ''columns'', and one for the ''interaction'' between the two. Each ''F''-value is computed by subtracting the mean square of the relevant dimension (''R'', ''C'', or ''RC'') by the within-groups mean square: |

| Line 160: | Line 222: |

| = 63.0125 / 1.534375<<BR>> = '''41.06721'''<<BR>><<BR>> |

= 63.0125 / 1.55<<BR>> = '''40.65323'''<<BR>><<BR>> |

| Line 164: | Line 226: |

| = 7.8125 / 1.534375<<BR>> = '''5.09165'''<<BR>><<BR>> |

= 7.8125 / 1.55<<BR>> = '''5.040323'''<<BR>><<BR>> |

| Line 168: | Line 230: |

| = 10.5125 / 1.534375<<BR>> = '''6.851324'''<<BR>><<BR>> And, finally, at '''Step 10''', we can organize all of the above into a table, along with the appropriate '''F,,CRIT,,''' values (looked up in a table like [[http://www.medcalc.org/manual/f-table.php|this one]]) that we'll use for comparison and interpretation of our computations: '''F,,CRIT,,''' (1, 16) ,,α=0.5,, = '''4.49''' |

= 10.5125 / 1.55<<BR>> = '''6.782258'''<<BR>><<BR>> '''Step 10''' is organizing all of the above calculations into a table, along with the appropriate '''''F,,crit,,''''' values (looked up in a table like [[http://www.medcalc.org/manual/f-table.php|this one]]). Then we'll compare each''F,,obt,,'' value we computed to the ''F,,crit,,'' values we looked up to draw our conclusions: '''''F,,crit,,''''' (1, 16) ,,α=0.5,, = '''4.49''' |

| Line 177: | Line 239: |

| || rows || 63.0125 || 1 || 63.0125 || 41.06721 || 4.49 || ''p'' < 0.05 || || columns || 7.8125 || 1 || 7.8125 || 5.09165 || 4.49 || ''p'' < 0.05 || || r * c || 10.5125 || 1 || 10.5125 || 6.851324 || 4.49 || ''p'' < 0.05 || || within || 24.55 || 16 || 1.534375 || -- || -- || -- || || total || 187.225 || 19 || -- || -- || -- || -- || [INTERPRETATION HERE] |

|| rows || 63.0125 || 1 || 63.0125 || 40.65323 || 4.49 || ''p'' < 0.05 || || columns || 7.8125 || 1 || 7.8125 || 5.040323 || 4.49 || ''p'' < 0.05 || || r * c || 10.5125 || 1 || 10.5125 || 6.782258 || 4.49 || ''p'' < 0.05 || || within || 24.8 || 16 || 1.55 || -- || -- || -- || || total || 187.475 || 19 || -- || -- || -- || -- || Both variables (treatment and age) are significant, as indicated by the fact that ''F,,obt,,'' > ''F,,crit,,'' for both. Thus, we can reject ''H,,01,,'' and ''H,,02,,'' and conclude that dendritic spine density is affected by prenatal cocaine exposure and age. The interaction between the two factors (''r * c'') is also significant. Thus, we can also reject ''H,,03,,'' and conclude there is a significant interaction between treatment and age. To further interpret these results, we can plot the group means as follows: {{attachment:2wayanova-plot.jpg|Means by group|width=450}} '''NOTE''': Remember that the statistics provided by the ANOVA quantify the effect of each factor (in this case, treatment and age). These statistics do not compare individual condition means, such as whether ''4-week control'' differs from ''12-week control''. If ''k'' = the number of groups, the number of possible comparisons is ''k'' * (''k''-1) / 2. In the above example, we have 4 groups, so there are (4*3)/2 = 6 possible comparisons between these group means. Statistical testing of these individual comparisons requires a ''post-hoc analysis'' that corrects for experiment-wise error rate. If all possible comparisons are of interest, the [[Tukey's test| Tukey's HSD (Honestly Significant Difference) Test]] is commonly used.<<BR>><<BR>> === Tukey's HSD (Honestly Significant Difference) Test === Tukey's test is a single-step, multiple-comparison statistical procedure often used in conjunction with an ANOVA to test which group means are significantly different from one another. 
It is used in cases where group sizes are equal (the Tukey-Kramer procedure is used if group sizes are unequal) and it compares all possible pairs of means. Note that the Tukey's test formula is very similar to that of the ''t''-test, except that it corrects for experiment-wise error rate. (When there are multiple comparisons being made, the probability of making a [[http://en.wikipedia.org/wiki/Type_I_and_type_II_errors|type I error]] (rejecting a true null hypothesis) increases, so Tukey's test corrects for this.) The formula for a Tukey's test is: {{{#!latex \[ q_{obt} = (Y_{A} - Y_{B}) / \sqrt{ s_{W}^2 / n} \] }}} where ''Y'',,A,, is the larger of the two means being compared, ''Y'',,B,, is the smaller,''s,,W,,^2^'' is the mean squared error within, and ''n'' is the number of data points within each group . Once computed, the ''q,,obt,,'' value is compared to a ''q''-value from the ''q'' distribution. If the ''q,,obt,,'' value is ''larger'' than the ''q,,crit,,'' value from the distribution, the two means are significantly different. So, if we wanted to use a Tukey's test to determine whether ''4-week control'' significantly differs from ''12-week control'', we'd calculate it as follows ''q,,obt,,'' = {{attachment:xbar.gif|X-bar}}'',,12-week, control,,'' - {{attachment:xbar.gif|X-bar}}'',,4-week, control,,'' / <<latex($\sqrt{ s_{W}^2 / n}$)>><<BR>> = 9.7 - 7 / <<latex($\sqrt{ 1.55 / 5}$)>><<BR>> = 9.7 - 7 / <<latex($\sqrt{ 1.55 / 5}$)>><<BR>> = 2.7 / 0.5567764<<BR>> = '''4.849343'''<<BR>> The ''q,,crit,,'' value may be looked up in a chart (like [[http://www.stat.duke.edu/courses/Spring98/sta110c/qtable.html|this one]]) using the appropriate values for ''k'' (which represents the number of group means, so 4 here) and ''df,,w,,'' (16). So here, ''q,,crit,,'' (4, 16) ,,α=0.5,,= 4.05. Since ''q,,obt,,'' > ''q,,crit,,'' (4.85 > 4.05), we can conclude that the two means '''are''', in fact, (honestly) significantly different. 
<<BR>><<BR>> Additional group comparisons reveal that spine density does not change significantly with age in the cocaine-exposed group, but is significantly greater in the control group as compared to cocaine-exposed animals at both 4 and 12 weeks of age. Thus, the effect of prenatal drug exposure is magnified with time due to a developmental increase in spine density that occurs only in the control group. '''IMPORTANT NOTE''': There are various post-hoc tests that can be used, and the choice of which method to apply is a controversial area in statistics. The different tests vary in how conservative they are: more conservative tests are less powerful and have a lower risk of a type I error (rejecting a true null hypothesis), however this comes at the cost of increasing the risk of a type II error (incorrectly accepting the null hypothesis). Some common methods, listed in order of decreasing power, are: Fisher’s LSD, Newman-Keuls, Tukey HSD, Bonferonni, Scheffé. The following provides some guidelines for choosing an appropriate post-hoc procedure. * If all pairwise comparisons are truly relevant, the Tukey method is recommended.<<BR>> * If it is most reasonable to compare all groups against a single control, then the Dunnett test is recommended.<<BR>> * If only a subset of the pairwise comparisons are relevant, then the Bonferroni method is often utilized for those selected comparisons. For example, in the present example one would likely not be interested in comparing the ''4-week control'' to the ''12-week cocaine'' group, or the ''12-week control'' to the ''4-week cocaine'' group. |

| Line 186: | Line 285: |

| [BASIC INFO ABOUT MULTIPLE REGRESSION] | Another analysis technique you could use is a multiple regression. In multiple regression, we find coefficients for each group such that we are able to best predict the group means. The multiple regression computes standard errors on the coefficients, meaning that we can determine if a coefficient is significantly different from zero. On this simple example, multiple regression will give identical answers to ANOVA, but in more complex cases, multiple regression is a more powerful technique that allows you to include additional nuisance predictors, that the analysis controls for before testing for significance of your independent variables. <<BR>><<BR>> [[FrontPage|Go back to the Homepage]] |

Situations with Two or More Variables of Interest

When considering the relationship among three or more variables, an interaction may arise. An interaction describes a situation in which the simultaneous influence of two variables on a third is not additive: the effect of one variable on the outcome depends on the level of the other. Most commonly, interactions are considered in the context of multiple regression analyses, but they may also be evaluated using two-way ANOVA.

An Example Problem

To determine whether prenatal exposure to cocaine alters dendritic spine density within the prefrontal cortex, 20 rats were divided equally between two treatment groups that were prenatally exposed to either cocaine or placebo. Because any effect of prenatal drug exposure might be evident at one age but not another, the animals within each treatment group were further divided into two sub-groups: one was studied at 4 weeks of age and the other at 12 weeks of age. Thus, our independent variables are treatment (prenatal cocaine or placebo exposure) and age (4 or 12 weeks), and our dependent variable is spine density. The following table shows one possible outcome of such a study:

DENDRITIC SPINE DENSITY

| 4-Week Control | 4-Week Cocaine | 12-Week Control | 12-Week Cocaine |
|---|---|---|---|
| 7.5 | 5.5 | 8.0 | 5.0 |
| 8.0 | 3.5 | 10.0 | 4.5 |
| 6.0 | 4.5 | 13.0 | 4.0 |
| 7.0 | 6.0 | 9.0 | 6.0 |
| 6.5 | 5.0 | 8.5 | 4.0 |

There are three null hypotheses we may want to test. The first two test the effects of each independent variable (or factor) under investigation, and the third tests for an interaction between these two factors:

H01: Prenatal treatment substance (control or cocaine) has no effect on dendritic spine density in rats.

We can state this more formally as $\mu_{\text{Control}} = \mu_{\text{Cocaine}}$, where $\mu_{\text{Control}}$ and $\mu_{\text{Cocaine}}$ are the population mean spine densities of rats who were not and were prenatally exposed to cocaine, respectively.

H02: Age has no effect on dendritic spine density.

Or, more formally, $\mu_{\text{4-Week}} = \mu_{\text{12-Week}}$, where $\mu_{\text{4-Week}}$ and $\mu_{\text{12-Week}}$ are the population mean spine densities of rats at 4 and 12 weeks of age, respectively.

H03: There is no interaction between the two factors (treatment and age); i.e., the effect of prenatal treatment on spine density is the same at both ages.

Or, more formally, $\mu_{\text{4-Week, Cocaine}} - \mu_{\text{4-Week, Control}} = \mu_{\text{12-Week, Cocaine}} - \mu_{\text{12-Week, Control}}$, where each $\mu$ represents the population mean spine density of rats at the labeled age and prenatal treatment condition. This does not require the four cell means to be equal; main effects of treatment and age can still be present when there is no interaction.

Two-Way ANOVA

A two-way ANOVA is an analysis technique that quantifies how much of the variance in a sample can be accounted for by each of two categorical variables and by their interaction. Because different rats are used in each of the four experimental groups, a standard (between-subjects) two-way ANOVA is appropriate. If the same rats were measured in every experimental condition, a two-way repeated-measures ANOVA would be needed instead.

Step 1 is to compute group means (for each cell group), row and column means, and the grand mean (for all observations in the whole experiment):

GROUP MEANS

| | 4-Week | 12-Week | All Ages |
|---|---|---|---|
| Control | 7 | 9.7 | 8.35 |
| Cocaine | 4.9 | 4.7 | 4.8 |
| All Prenatal Exposures | 5.95 | 7.2 | 6.575 |
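The Step 1 arithmetic can be sketched in Python with only the standard library. This is a minimal illustration, not part of the original walkthrough; the dictionary layout and the names `data`, `cell_means`, `row_means`, `col_means`, and `grand_mean` are our own choices, with rows taken to be the prenatal treatment conditions and columns the ages, as in the table above.

```python
from statistics import mean

# Spine-density data from the example table, keyed by (treatment, age).
# Rows of the group-means table are treatments; columns are ages.
data = {
    ("control", "4-week"): [7.5, 8.0, 6.0, 7.0, 6.5],
    ("cocaine", "4-week"): [5.5, 3.5, 4.5, 6.0, 5.0],
    ("control", "12-week"): [8.0, 10.0, 13.0, 9.0, 8.5],
    ("cocaine", "12-week"): [5.0, 4.5, 4.0, 6.0, 4.0],
}
treatments = ["control", "cocaine"]  # rows
ages = ["4-week", "12-week"]         # columns

# Cell (group) means: one per treatment-by-age combination.
cell_means = {cell: mean(values) for cell, values in data.items()}

# Row and column means pool every observation in that row or column.
row_means = {t: mean([x for a in ages for x in data[(t, a)]]) for t in treatments}
col_means = {a: mean([x for t in treatments for x in data[(t, a)]]) for a in ages}

# Grand mean over all N = 20 observations.
grand_mean = mean([x for values in data.values() for x in values])

for t in treatments:
    print(t, [round(cell_means[(t, a)], 3) for a in ages], round(row_means[t], 3))
print("all", [round(col_means[a], 3) for a in ages], round(grand_mean, 3))
```

Because every cell has the same number of observations here, each row or column mean is also the plain average of its cell means; with unequal group sizes that shortcut would no longer hold.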

Take a moment to define your variables

Before we go any further, we should define some variables that we'll use to complete the computations needed for the rest of the analysis:

n = number of observations in each group (here, 5, since there are 5 subjects per group)

N = total number of observations in the whole experiment (here, 20)

r = number of rows (here 2, one for each prenatal treatment condition)

c = number of columns (here 2, one for each age group)

$\overline{X}_g$ = mean of a particular cell group g (for example, $\overline{X}_{\text{12-Week, Control}} = 9.7$)

$\overline{X}_R$ = mean of a particular row R (for example, $\overline{X}_{\text{Cocaine}} = 4.8$)

$\overline{X}_C$ = mean of a particular column C (for example, $\overline{X}_{\text{4-Week}} = 5.95$)

And finally, to refer to the grand mean (the overall mean of all observations in the experiment), we'll simply use the notation $\overline{X}$ (here, $\overline{X} = 6.575$).

Now that we've calculated all of our means, it is helpful to plot them as well, since the pattern is easier to understand that way.

[Figure: Means by group]
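The pattern in the means can also be summarized numerically. The Python sketch below is our own illustration (the helper name `treatment_effect` is hypothetical): it computes the cocaine-minus-control difference at each age from the Step 1 cell means, and then the difference between those two differences. That last number is the sample counterpart of the quantity H03 says is zero in the population; on its own it says nothing about statistical significance, which is what the interaction F-test later in this analysis addresses.

```python
# Cell means from the Step 1 table, keyed by (treatment, age).
cell_means = {
    ("control", "4-week"): 7.0,
    ("cocaine", "4-week"): 4.9,
    ("control", "12-week"): 9.7,
    ("cocaine", "12-week"): 4.7,
}

def treatment_effect(age):
    """Hypothetical helper: cocaine mean minus control mean at one age."""
    return cell_means[("cocaine", age)] - cell_means[("control", age)]

effect_4wk = treatment_effect("4-week")    # 4.9 - 7.0
effect_12wk = treatment_effect("12-week")  # 4.7 - 9.7

# Interaction contrast: how much the treatment effect changes with age.
# Under H03 the population version of this contrast is zero.
interaction_contrast = effect_4wk - effect_12wk

print(f"cocaine effect at 4 weeks:  {effect_4wk:+.2f}")
print(f"cocaine effect at 12 weeks: {effect_12wk:+.2f}")
print(f"interaction contrast:       {interaction_contrast:+.2f}")
```

Here the cocaine group sits about 2.1 units below control at 4 weeks but about 5.0 units below control at 12 weeks, so the sample contrast is about 2.9.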

Step 2 is to calculate the sum-of-squares for each individual group ($SS_g$) using this:

\[
SS_g = \sum_{i=1}^{n} (x_{i,g} - \overline{X}_g)^2
\]

where $x_{i,g}$ is the ith measurement for group g, and $\overline{X}_g$ is the mean of group g. Remember here that when we say "group", we are referring to individual cells (not rows or columns, which we'll deal with next):

For each group, this formula is applied as follows:

4-Week Control:

{7.5, 8, 6, 7, 6.5}, $\overline{X}_{\text{4-week, control}} = 7$

$SS_{\text{4-week, control}} = (7.5-7)^2 + (8-7)^2 + (6-7)^2 + (7-7)^2 + (6.5-7)^2 = 2.5$

4-Week Cocaine:

{5.5, 3.5, 4.5, 6, 5}, $\overline{X}_{\text{4-week, cocaine}} = 4.9$

$SS_{\text{4-week, cocaine}} = (5.5-4.9)^2 + (3.5-4.9)^2 + (4.5-4.9)^2 + (6-4.9)^2 + (5-4.9)^2 = 3.7$

12-Week Control:

{8, 10, 13, 9, 8.5}, $\overline{X}_{\text{12-week, control}} = 9.7$

$SS_{\text{12-week, control}} = (8-9.7)^2 + (10-9.7)^2 + (13-9.7)^2 + (9-9.7)^2 + (8.5-9.7)^2 = 15.8$

12-Week Cocaine:

{5, 4.5, 4, 6, 4}, $\overline{X}_{\text{12-week, cocaine}} = 4.7$

$SS_{\text{12-week, cocaine}} = (5-4.7)^2 + (4.5-4.7)^2 + (4-4.7)^2 + (6-4.7)^2 + (4-4.7)^2 = 2.8$
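The per-group calculation above can be checked with a short Python sketch. The function name `sum_of_squares` and the group labels are our own; the function simply implements the Step 2 formula for one cell.

```python
def sum_of_squares(values):
    """SS_g: sum of squared deviations of each value from the group's own mean."""
    group_mean = sum(values) / len(values)
    return sum((x - group_mean) ** 2 for x in values)

groups = {
    "4-week control": [7.5, 8.0, 6.0, 7.0, 6.5],
    "4-week cocaine": [5.5, 3.5, 4.5, 6.0, 5.0],
    "12-week control": [8.0, 10.0, 13.0, 9.0, 8.5],
    "12-week cocaine": [5.0, 4.5, 4.0, 6.0, 4.0],
}

ss_by_group = {name: sum_of_squares(values) for name, values in groups.items()}
for name, ss in ss_by_group.items():
    # Rounded for display; floating-point arithmetic leaves tiny residues.
    print(f"SS({name}) = {ss:.2f}")
```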

Step 3 is to calculate the between-groups sum-of-squares ($SS_B$):

\[
SS_B = n \cdot \sum_{g} (\overline{X}_{g} - \overline{X})^2
\]

where n is the number of observations per group, \(\overline{X}_g\) is the mean for group g, and \(\overline{X}\) is the grand mean. So...

\[
\begin{aligned}
SS_B &= n \left[ (\overline{X}_{\text{4-week, control}} - \overline{X})^2 + (\overline{X}_{\text{4-week, cocaine}} - \overline{X})^2 + (\overline{X}_{\text{12-week, control}} - \overline{X})^2 + (\overline{X}_{\text{12-week, cocaine}} - \overline{X})^2 \right] \\
&= 5 \left[ (7 - 6.575)^2 + (4.9 - 6.575)^2 + (9.7 - 6.575)^2 + (4.7 - 6.575)^2 \right] \\
&= 5 \left[ 0.180625 + 2.805625 + 9.765625 + 3.515625 \right] \\
&= 5 \left[ 16.2675 \right] \\
&= 81.3375
\end{aligned}
\]
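The between-groups step can be sketched the same way. This is a minimal Python illustration assuming a balanced design (every group has the same n, as in this example); `groups` is again an illustrative name for the cell data from the text.

```python
from statistics import mean

# Cell data from the text; the name `groups` is illustrative.
groups = {
    "4-week, control": [7.5, 8, 6, 7, 6.5],
    "4-week, cocaine": [5.5, 3.5, 4.5, 6, 5],
    "12-week, control": [8, 10, 13, 9, 8.5],
    "12-week, cocaine": [5, 4.5, 4, 6, 4],
}

sizes = {len(values) for values in groups.values()}
assert len(sizes) == 1, "this formula assumes every group has the same n"
n = sizes.pop()  # observations per group (5 here)

grand_mean = mean(x for values in groups.values() for x in values)

# SS_B = n * sum over groups of (group mean - grand mean)^2
ss_between = n * sum((mean(values) - grand_mean) ** 2 for values in groups.values())
print(f"grand mean = {grand_mean:.3f}, SS_B = {ss_between:.4f}")
```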

Step 4 pertains to within-group variance. Here, we'll calculate the sum-of-squares, degrees of freedom, and mean square error within groups. First, compute the within-groups sum-of-squares (\(SS_W\)) by summing the sum-of-squares you computed for each group in Step 2:

\[
SS_W = \sum_{g} SS_g
\]

where \(SS_g\) is the sum-of-squares for group g. So...

\[
\begin{aligned}
SS_W &= SS_{\text{4-week, control}} + SS_{\text{4-week, cocaine}} + SS_{\text{12-week, control}} + SS_{\text{12-week, cocaine}} \\
&= 2.5 + 3.7 + 15.8 + 2.8 \\
&= 24.8
\end{aligned}
\]

Next, calculate the within-groups degrees of freedom (\(df_W\)) using this:

\[
df_W = N - rc
\]

where N is the total number of observations in the experiment, r is the number of rows, and c is the number of columns. So...

\[
df_W = 20 - (2 \cdot 2) = 16
\]

And finally, we compute the within-groups mean square error (\(s_W^2\)) by dividing \(SS_W\) by \(df_W\):

\[
s_W^2 = \frac{SS_W}{df_W} = \frac{24.8}{16} = 1.55
\]

Note that \(SS_W\) is also known as the "residual" or "error" sum-of-squares, since it quantifies the variability that remains after the condition means are taken into account. The degrees of freedom here are N - rc because there are N data points but r × c means are fit, leaving N - rc values that are free to vary.
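The within-groups quantities follow directly from the per-group sums-of-squares. The Python sketch below assumes the same illustrative `groups` dictionary and hard-codes r = c = 2 for this design.

```python
from statistics import mean

# Cell data from the text; names are illustrative.
groups = {
    "4-week, control": [7.5, 8, 6, 7, 6.5],
    "4-week, cocaine": [5.5, 3.5, 4.5, 6, 5],
    "12-week, control": [8, 10, 13, 9, 8.5],
    "12-week, cocaine": [5, 4.5, 4, 6, 4],
}

r, c = 2, 2  # number of rows (substance levels) and columns (duration levels)

def group_ss(values):
    """SS_g: squared deviations of each value from its group mean, summed."""
    m = mean(values)
    return sum((x - m) ** 2 for x in values)

N = sum(len(values) for values in groups.values())                # total observations
ss_within = sum(group_ss(values) for values in groups.values())   # SS_W
df_within = N - r * c                                             # df_W = N - rc
ms_within = ss_within / df_within                                 # s_W^2

print(f"SS_W = {ss_within:.2f}, df_W = {df_within}, s_W^2 = {ms_within:.2f}")
```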

Step 5 pertains to row variance. Here, we'll calculate the same quantities as in the last step (the sum-of-squares, degrees of freedom, and mean square error), but for the rows. First, compute the row sum-of-squares (\(SS_R\)) from the row means:

\[
SS_R = n_R \cdot \sum_{R} (\overline{X}_R - \overline{X})^2
\]

where \(n_R\) is the number of observations per row, \(\overline{X}_R\) is the mean of row R, and \(\overline{X}\) is the grand mean. So...

\[
\begin{aligned}
SS_R &= n_R \left[ (\overline{X}_{\text{control}} - \overline{X})^2 + (\overline{X}_{\text{cocaine}} - \overline{X})^2 \right] \\
&= 10 \left[ (8.35 - 6.575)^2 + (4.8 - 6.575)^2 \right] \\
&= 10 \left[ 3.150625 + 3.150625 \right] \\
&= 10 \left[ 6.30125 \right] \\
&= 63.0125
\end{aligned}
\]

\(df_R = r - 1\), where r is the number of rows:

\[
df_R = 2 - 1 = 1
\]

And, just as before, divide the sum-of-squares by the degrees of freedom to get the mean square for the rows:

\[
s_R^2 = \frac{SS_R}{df_R} = \frac{63.0125}{1} = 63.0125
\]
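For the rows, each row pools the 4-week and 12-week cells of one substance. The Python sketch below assumes that row layout (control and cocaine as rows) and uses illustrative variable names.

```python
from statistics import mean

# Rows are the substance factor; each row pools its 4-week and 12-week cells (data from the text).
rows = {
    "control": [7.5, 8, 6, 7, 6.5] + [8, 10, 13, 9, 8.5],
    "cocaine": [5.5, 3.5, 4.5, 6, 5] + [5, 4.5, 4, 6, 4],
}

all_values = [x for values in rows.values() for x in values]
grand_mean = mean(all_values)
n_r = len(rows["control"])  # observations per row (10 here; both rows are the same size)

row_means = {name: mean(values) for name, values in rows.items()}

# SS_R = n_R * sum over rows of (row mean - grand mean)^2
ss_rows = n_r * sum((m - grand_mean) ** 2 for m in row_means.values())
df_rows = len(rows) - 1        # r - 1
ms_rows = ss_rows / df_rows    # s_R^2

print(f"row means = {row_means}, SS_R = {ss_rows:.4f}, df_R = {df_rows}, s_R^2 = {ms_rows:.4f}")
```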

In Step 6, we calculate all of the variance metrics again for the columns (\(SS_C\), \(df_C\), \(s_C^2\)):

\[
SS_C = n_C \cdot \sum_{C} (\overline{X}_C - \overline{X})^2
\]

where \(n_C\) is the number of observations per column, \(\overline{X}_C\) is the mean of column C, and \(\overline{X}\) is the grand mean. So...

\[
\begin{aligned}
SS_C &= n_C \left[ (\overline{X}_{\text{4-week}} - \overline{X})^2 + (\overline{X}_{\text{12-week}} - \overline{X})^2 \right] \\
&= 10 \left[ (5.95 - 6.575)^2 + (7.2 - 6.575)^2 \right] \\
&= 10 \left[ 0.390625 + 0.390625 \right] \\
&= 10 \left[ 0.78125 \right] \\
&= 7.8125
\end{aligned}
\]

\(df_C = c - 1\), where c is the number of columns:

\[
df_C = 2 - 1 = 1
\]

\[
s_C^2 = \frac{SS_C}{df_C} = \frac{7.8125}{1} = 7.8125
\]
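The factor \(n_C\) in the column formula simply counts each column mean once per observation in that column. The Python sketch below makes that explicit by tagging every observation with its column (duration) and summing the squared deviation of its column mean from the grand mean over all observations; this equals \(n_C \cdot \sum_C (\overline{X}_C - \overline{X})^2\) when the columns are the same size. The `observations` list is an illustrative layout, not something defined in the text.

```python
from statistics import mean

# Each observation tagged with its column (duration); data from the text.
observations = (
    [("4-week", x) for x in [7.5, 8, 6, 7, 6.5, 5.5, 3.5, 4.5, 6, 5]]
    + [("12-week", x) for x in [8, 10, 13, 9, 8.5, 5, 4.5, 4, 6, 4]]
)

grand_mean = mean(x for _, x in observations)
column_means = {
    col: mean(x for c, x in observations if c == col)
    for col in ("4-week", "12-week")
}

# One (column mean - grand mean)^2 term per observation, which equals
# n_C * sum over columns of (column mean - grand mean)^2 for equal-sized columns.
ss_columns = sum((column_means[c] - grand_mean) ** 2 for c, _ in observations)
df_columns = len(column_means) - 1   # c - 1
ms_columns = ss_columns / df_columns # s_C^2

print(f"column means = {column_means}, SS_C = {ss_columns:.4f}, s_C^2 = {ms_columns:.4f}")
```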

Step 7 is to calculate the same three variance metrics for the row-column interaction (\(SS_{RC}\), \(df_{RC}\), \(s_{RC}^2\)). To calculate \(SS_{RC}\), subtract the row and column sums-of-squares (\(SS_R\) and \(SS_C\)) from the between-groups sum-of-squares (\(SS_B\)):

\[
SS_{RC} = SS_B - SS_R - SS_C = 81.3375 - 63.0125 - 7.8125 = 10.5125
\]

And, for \(df_{RC}\)...

\[
df_{RC} = (r - 1)(c - 1) = (2 - 1)(2 - 1) = 1
\]

Finally, \(s_{RC}^2\):

\[
s_{RC}^2 = \frac{SS_{RC}}{df_{RC}} = \frac{10.5125}{1} = 10.5125
\]
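The subtraction can be cross-checked by computing the interaction directly from the cell means: for each cell, take the cell mean minus its row mean and column mean, plus the grand mean, square it, sum over cells, and multiply by n. That direct formula is a standard identity for balanced designs rather than something stated in the text; the Python sketch below computes both routes so they can be compared. The helper `pooled_mean` and the `(row, column)` keys are illustrative assumptions.

```python
from statistics import mean

# Cell data keyed by (row, column) = (substance, duration); data from the text.
cells = {
    ("control", "4-week"): [7.5, 8, 6, 7, 6.5],
    ("cocaine", "4-week"): [5.5, 3.5, 4.5, 6, 5],
    ("control", "12-week"): [8, 10, 13, 9, 8.5],
    ("cocaine", "12-week"): [5, 4.5, 4, 6, 4],
}
row_levels = ("control", "cocaine")
col_levels = ("4-week", "12-week")
n = 5  # observations per cell (balanced design)

def pooled_mean(keep):
    """Mean of all observations in cells whose (row, column) key satisfies keep."""
    return mean(x for key, values in cells.items() if keep(key) for x in values)

grand = pooled_mean(lambda key: True)
row_mean = {r: pooled_mean(lambda key, r=r: key[0] == r) for r in row_levels}
col_mean = {c: pooled_mean(lambda key, c=c: key[1] == c) for c in col_levels}
cell_mean = {key: mean(values) for key, values in cells.items()}

ss_b = n * sum((m - grand) ** 2 for m in cell_mean.values())
ss_r = n * len(col_levels) * sum((m - grand) ** 2 for m in row_mean.values())
ss_c = n * len(row_levels) * sum((m - grand) ** 2 for m in col_mean.values())

# Route 1 (as in the text): what the cells explain beyond the two main effects.
ss_rc_by_subtraction = ss_b - ss_r - ss_c

# Route 2: n times the summed squared interaction residuals of the cell means.
ss_rc_direct = n * sum(
    (cell_mean[(r, c)] - row_mean[r] - col_mean[c] + grand) ** 2
    for r in row_levels
    for c in col_levels
)

df_rc = (len(row_levels) - 1) * (len(col_levels) - 1)  # (r - 1)(c - 1)
ms_rc = ss_rc_direct / df_rc                            # s_RC^2

print(f"SS_RC by subtraction = {ss_rc_by_subtraction:.4f}, directly = {ss_rc_direct:.4f}")
```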

Step 8 is to calculate the total variance metrics for the experiment. As always, start by computing the sum-of-squares (\(SS_T\)). Because the between-groups sum-of-squares already contains the row, column, and interaction components (\(SS_B = SS_R + SS_C + SS_{RC}\)), the total is the between-groups plus the within-groups sum-of-squares:

\[
SS_T = SS_B + SS_W = SS_R + SS_C + SS_{RC} + SS_W
\]

\[
SS_T = 81.3375 + 24.8 = 106.1375
\]

(Adding \(SS_B\) and \(SS_R + SS_C + SS_{RC}\) together would count the same variability twice.) The total degrees of freedom are \(df_T = N - 1\), where N is the total number of observations in the experiment:

\[
df_T = 20 - 1 = 19
\]

which matches \(df_R + df_C + df_{RC} + df_W = 1 + 1 + 1 + 16\).

Step 9 is calculating the F-values (\(F_{obt}\)) required to determine significance. For two-way ANOVAs, you'll compute 3 F-values: one for rows, one for columns, and one for the interaction between the two. Each F-value is computed by dividing the mean square of the relevant source (R, C, or RC) by the within-groups mean square:

\[
F_R = \frac{s_R^2}{s_W^2} = \frac{63.0125}{1.55} = 40.65323
\]

\[
F_C = \frac{s_C^2}{s_W^2} = \frac{7.8125}{1.55} = 5.040323
\]

\[
F_{RC} = \frac{s_{RC}^2}{s_W^2} = \frac{10.5125}{1.55} = 6.782258
\]

Step 10 is organizing all of the above calculations into a table, along with the appropriate \(F_{crit}\) value (looked up in an F-distribution table). Then we compare each \(F_{obt}\) value we computed to \(F_{crit}\) to draw our conclusions:

\[
F_{crit}(1, 16)_{\alpha = 0.05} = 4.49
\]

ANOVA TABLE

| Source | SS | df | \(s^2\) | \(F_{obt}\) | \(F_{crit}\) | p |
|---|---|---|---|---|---|---|
| rows | 63.0125 | 1 | 63.0125 | 40.65323 | 4.49 | p < 0.05 |
| columns | 7.8125 | 1 | 7.8125 | 5.040323 | 4.49 | p < 0.05 |
| r * c | 10.5125 | 1 | 10.5125 | 6.782258 | 4.49 | p < 0.05 |
| within | 24.8 | 16 | 1.55 | -- | -- | -- |
| total | 106.1375 | 19 | -- | -- | -- | -- |
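Steps 8 to 10 can be reproduced end to end in a short Python sketch that also computes \(SS_T\) directly from the raw observations, so it can be compared with \(SS_B + SS_W\). The cell labels and variable names are illustrative. \(F_{crit}\) = 4.49 is copied from the text rather than computed, because the Python standard library has no F-distribution quantile function; a single value suffices here only because every effect has 1 numerator degree of freedom.

```python
from statistics import mean

# Cells keyed by (row, column) = (substance, duration); data from the text.
cells = {
    ("control", "4-week"): [7.5, 8, 6, 7, 6.5],
    ("cocaine", "4-week"): [5.5, 3.5, 4.5, 6, 5],
    ("control", "12-week"): [8, 10, 13, 9, 8.5],
    ("cocaine", "12-week"): [5, 4.5, 4, 6, 4],
}
ROWS, COLS = ("control", "cocaine"), ("4-week", "12-week")
F_CRIT = 4.49  # F_crit(1, 16) at alpha = 0.05, copied from the text

n = len(cells[(ROWS[0], COLS[0])])  # observations per cell (balanced design assumed)
values = [x for v in cells.values() for x in v]
N = len(values)
grand = mean(values)

cell_mean = {k: mean(v) for k, v in cells.items()}
# With equal cell sizes, a row (or column) mean is the mean of its cell means.
row_mean = {r: mean(cell_mean[(r, c)] for c in COLS) for r in ROWS}
col_mean = {c: mean(cell_mean[(r, c)] for r in ROWS) for c in COLS}

ss_b = n * sum((m - grand) ** 2 for m in cell_mean.values())
ss_r = n * len(COLS) * sum((m - grand) ** 2 for m in row_mean.values())
ss_c = n * len(ROWS) * sum((m - grand) ** 2 for m in col_mean.values())
ss_rc = ss_b - ss_r - ss_c
ss_w = sum((x - cell_mean[k]) ** 2 for k, v in cells.items() for x in v)
ss_t = sum((x - grand) ** 2 for x in values)  # computed directly from the raw data

df_r, df_c = len(ROWS) - 1, len(COLS) - 1
df_rc = df_r * df_c
df_w = N - len(ROWS) * len(COLS)
df_t = N - 1
ms_w = ss_w / df_w

table = []
for source, ss, df in (("rows", ss_r, df_r), ("columns", ss_c, df_c), ("r * c", ss_rc, df_rc)):
    ms = ss / df
    f_obt = ms / ms_w
    table.append((source, ss, df, ms, f_obt, f_obt > F_CRIT))

print(f"{'source':8s} {'SS':>9s} {'df':>3s} {'s2':>9s} {'F_obt':>9s}  F_obt > F_crit?")
for source, ss, df, ms, f_obt, significant in table:
    print(f"{source:8s} {ss:9.4f} {df:3d} {ms:9.4f} {f_obt:9.4f}  {significant}")
print(f"{'within':8s} {ss_w:9.4f} {df_w:3d} {ms_w:9.4f}")
print(f"{'total':8s} {ss_t:9.4f} {df_t:3d}")
```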

Both main effects (substance and duration) are significant, as indicated by the fact that \(F_{obt} > F_{crit}\) for both. Thus, we can reject H01 and H02 and conclude that the number of hours slept is affected both by cocaine use and by the duration of use. The interaction between the two factors (r * c) is also significant. Thus, we can also reject H03 and conclude that there is a significant interaction between substance and duration.

To further interpret these results, it helps to plot the group means.

NOTE: Remember that the statistics provided by the ANOVA quantify the effect of each factor (in this case, substance and duration). These statistics do not compare individual condition means, such as whether 4-week control differs from 12-week control. If k is the number of groups, the number of possible pairwise comparisons is k(k - 1)/2. In the above example, we have 4 groups, so there are (4 × 3)/2 = 6 possible comparisons between these group means. Statistical testing of these individual comparisons requires a post-hoc analysis that corrects for the experiment-wise error rate. If all possible comparisons are of interest, Tukey's HSD (Honestly Significant Difference) test is commonly used.

Tukey's HSD (Honestly Significant Difference) Test

Tukey's test is a single-step, multiple-comparison statistical procedure often used in conjunction with an ANOVA to test which group means are significantly different from one another. It is used when group sizes are equal (the Tukey-Kramer procedure is used if group sizes are unequal), and it compares all possible pairs of means. The Tukey statistic is very similar to the t statistic, except that it is compared against the studentized range (q) distribution, which corrects for the experiment-wise error rate. (When multiple comparisons are made, the probability of making at least one type I error, i.e. rejecting a true null hypothesis, increases; Tukey's test corrects for this.) The formula for Tukey's test is:

\[
q_{obt} = \frac{Y_A - Y_B}{\sqrt{s_W^2 / n}}
\]

where \(Y_A\) is the larger of the two means being compared, \(Y_B\) is the smaller, \(s_W^2\) is the within-groups mean square error, and n is the number of data points within each group. Once computed, \(q_{obt}\) is compared to a critical value \(q_{crit}\) from the studentized range (q) distribution. If \(q_{obt}\) is larger than \(q_{crit}\), the two means are significantly different.

So, if we wanted to use Tukey's test to determine whether 4-week control significantly differs from 12-week control, we'd calculate it as follows:

\[
\begin{aligned}
q_{obt} &= \frac{\overline{X}_{\text{12-week, control}} - \overline{X}_{\text{4-week, control}}}{\sqrt{s_W^2 / n}} \\
&= \frac{9.7 - 7}{\sqrt{1.55 / 5}} \\
&= \frac{2.7}{0.5567764} \\
&= 4.849343
\end{aligned}
\]

The \(q_{crit}\) value may be looked up in a table of the studentized range distribution using the appropriate values for k (the number of group means, so 4 here) and \(df_W\) (16). Here, \(q_{crit}(4, 16)_{\alpha = 0.05} = 4.05\). Since \(q_{obt} > q_{crit}\) (4.85 > 4.05), we can conclude that the two means are, in fact, (honestly) significantly different.
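The same computation extends to all k(k - 1)/2 = 6 pairwise comparisons. The Python sketch below assumes equal group sizes (the Tukey HSD case, not Tukey-Kramer) and copies \(q_{crit}\) = 4.05 from the text, because the Python standard library has no studentized range distribution. Variable names are illustrative.

```python
from itertools import combinations
from math import sqrt
from statistics import mean

# Cell data from the text; names are illustrative.
groups = {
    "4-week, control": [7.5, 8, 6, 7, 6.5],
    "4-week, cocaine": [5.5, 3.5, 4.5, 6, 5],
    "12-week, control": [8, 10, 13, 9, 8.5],
    "12-week, cocaine": [5, 4.5, 4, 6, 4],
}
Q_CRIT = 4.05  # q_crit(4, 16) at alpha = 0.05, copied from the text (not computed here)

n = 5  # observations per group
group_means = {g: mean(v) for g, v in groups.items()}
ss_within = sum((x - group_means[g]) ** 2 for g, v in groups.items() for x in v)
df_within = sum(len(v) for v in groups.values()) - len(groups)  # N - rc
ms_within = ss_within / df_within                               # s_W^2

standard_error = sqrt(ms_within / n)  # sqrt(s_W^2 / n)

results = {}
for a, b in combinations(groups, 2):
    # Y_A - Y_B with Y_A the larger mean, i.e. the absolute difference of the two means.
    q_obt = abs(group_means[a] - group_means[b]) / standard_error
    results[(a, b)] = (q_obt, q_obt > Q_CRIT)

for (a, b), (q_obt, significant) in results.items():
    print(f"{a} vs {b}: q_obt = {q_obt:.3f}, significant = {significant}")
```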

Applying the same \(q_{crit}\) to the remaining pairs shows that hours slept does not change significantly with duration in the cocaine group (\(q_{obt}\) = 0.2 / 0.5567764 ≈ 0.36), whereas the control group sleeps significantly more than the cocaine group at 12 weeks (\(q_{obt}\) = 5.0 / 0.5567764 ≈ 8.98) but not at 4 weeks (\(q_{obt}\) = 2.1 / 0.5567764 ≈ 3.77). Thus, the effect of cocaine on sleep grows with the duration of use, because sleep increases over time only in the control group.

IMPORTANT NOTE: There are various post-hoc tests that can be used, and the choice of which method to apply is a controversial area in statistics. The different tests vary in how conservative they are: more conservative tests are less powerful and have a lower risk of a type I error (rejecting a true null hypothesis); however, this comes at the cost of a higher risk of a type II error (failing to reject a false null hypothesis). Some common methods, listed in order of decreasing power, are: Fisher’s LSD, Newman-Keuls, Tukey HSD, Bonferroni, Scheffé. The following provides some guidelines for choosing an appropriate post-hoc procedure.

If all pairwise comparisons are truly relevant, the Tukey method is recommended.

If it is most reasonable to compare all groups against a single control, then the Dunnett test is recommended.

If only a subset of the pairwise comparisons are relevant, then the Bonferroni method is often utilized for those selected comparisons. For example, in the present example one would likely not be interested in comparing the 4-week control to the 12-week cocaine group, or the 12-week control to the 4-week cocaine group.

Multiple Regression

Another analysis technique you could use is multiple regression. In multiple regression, we find coefficients for each group such that we best predict the group means. Multiple regression also computes standard errors on the coefficients, meaning that we can determine whether a coefficient is significantly different from zero. On this simple example, multiple regression will give identical answers to ANOVA, but in more complex cases multiple regression is the more flexible technique: it allows you to include additional nuisance predictors, which the analysis controls for before testing the significance of your independent variables.
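To illustrate the claim that regression reproduces the ANOVA here, the sketch below fits an ordinary least-squares model with effect-coded (±1) predictors for substance, duration, and their product, using a small hand-written solver so it needs only the Python standard library. The coding scheme, the `invert` helper, and all variable names are assumptions for illustration, not a specific library's regression routine. In a balanced 2 × 2 design with this coding, the squared t statistic of each slope equals the corresponding F-value from the ANOVA table, and the residual mean square equals \(s_W^2\).

```python
from math import sqrt

# Effect codes are assumptions: substance -1 = control, +1 = cocaine;
# duration -1 = 4-week, +1 = 12-week. Hours slept are from the text.
cells = {
    (-1, -1): [7.5, 8, 6, 7, 6.5],
    (+1, -1): [5.5, 3.5, 4.5, 6, 5],
    (-1, +1): [8, 10, 13, 9, 8.5],
    (+1, +1): [5, 4.5, 4, 6, 4],
}

# Design matrix columns: intercept, substance, duration, substance x duration.
X, y = [], []
for (s, d), values in cells.items():
    for value in values:
        X.append([1.0, s, d, s * d])
        y.append(value)

def invert(matrix):
    """Gauss-Jordan inversion with partial pivoting (small dense matrices only)."""
    size = len(matrix)
    aug = [list(row) + [float(i == j) for j in range(size)] for i, row in enumerate(matrix)]
    for col in range(size):
        pivot = max(range(col, size), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(size):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[size:] for row in aug]

# Normal equations: coef = (X'X)^-1 X'y
p = len(X[0])
xtx = [[sum(row[i] * row[j] for row in X) for j in range(p)] for i in range(p)]
xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(p)]
xtx_inv = invert(xtx)
coef = [sum(xtx_inv[i][j] * xty[j] for j in range(p)) for i in range(p)]

# Residual mean square plays the role of s_W^2 (df = N - p = 16).
fitted = [sum(b * x for b, x in zip(coef, row)) for row in X]
ss_resid = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
ms_resid = ss_resid / (len(y) - p)

std_err = [sqrt(ms_resid * xtx_inv[i][i]) for i in range(p)]
t_stats = [b / se for b, se in zip(coef, std_err)]

names = ["intercept", "substance", "duration", "interaction"]
for name, b, se, t in zip(names, coef, std_err, t_stats):
    print(f"{name:12s} coef = {b:8.4f}  SE = {se:.4f}  t = {t:8.4f}  t^2 = {t * t:.4f}")
```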